News

- September, 2017: Full video/audio dataset and eyetracking data available (see Downloads).

- April, 2017: Website launched.

- May, 2016: Paper available (see Downloads).

Abstract

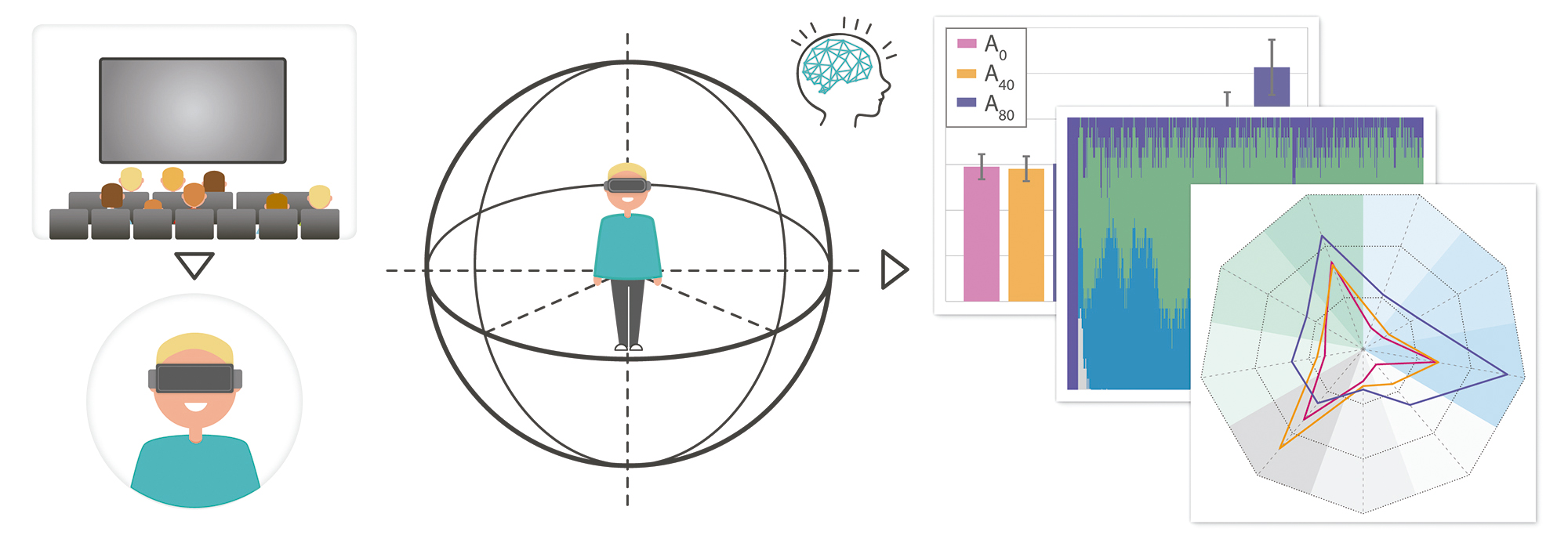

Traditional cinematography has relied for over a century on a well-established set of editing rules, called continuity editing, to create a sense of situational continuity. Despite massive changes in visual content across cuts, viewers generally have no trouble perceiving the discontinuous flow of information as a coherent set of events. However, Virtual Reality (VR) movies are intrinsically different from traditional movies in that the viewer controls the camera orientation at all times. As a consequence, common editing techniques that rely on camera orientations, zooms, etc., cannot be used. In this paper we investigate key questions to understand how well traditional movie editing carries over to VR, such as: Does the perception of continuity hold across edit boundaries? Under which conditions? Does viewers' observational behavior change after the cuts? To do so, we rely on recent cognition studies and on event segmentation theory, which states that our brains segment continuous actions into a series of discrete, meaningful events. We first replicate one of these studies to assess whether the predictions of this theory can be applied to VR. In a second stage, we gather gaze data from viewers watching VR videos containing different edits with varying parameters, and provide the first systematic analysis of viewers' behavior and the perception of continuity in VR. From this analysis we make a series of relevant findings; for instance, our data suggest that predictions from cognitive event segmentation theory are useful guides for VR editing; that different types of edits are equally well understood in terms of continuity; and that spatial misalignments between regions of interest at the edit boundaries favor a more exploratory behavior even after viewers have fixated on a new region of interest. In addition, we propose a number of metrics to describe viewers' attentional behavior in VR.
We believe the insights derived from our work can be useful as guidelines for VR content creation.

Downloads

- Paper [PDF, 24.8 MB]

- Supplementary [ZIP, 132.48 MB]

- Slides [PPTX, 301 MB]

- Code and data [ZIP, 266.44 MB]

- Full video/audio dataset and eyetracking data [shared folder]

The code and dataset provided are the property of Universidad de Zaragoza and are free for non-commercial use.

Acknowledgements

We would like to thank Paz Hernando and Marta Ortin for their help with the experiments and analyses. We would also like to thank CubeFX for allowing us to reproduce Star Wars - Hunting of the Fallen, and Abaco digital for helping us record the videos used to create the stimuli, as well as Sandra Malpica and Victor Arellano for being great actors. This research has been partially funded by an ERC Consolidator Grant (project CHAMELEON) and the Spanish Ministry of Economy and Competitiveness (projects TIN2016-78753-P, TIN2016-79710-P, and TIN2014-61696-EXP). Ana Serrano was supported by an FPI grant from the Spanish Ministry of Economy and Competitiveness. Diego Gutierrez was additionally funded by a Google Faculty Research Award and the BBVA Foundation. Gordon Wetzstein was supported by a Terman Faculty Fellowship, an Okawa Research Grant, and an NSF Faculty Early Career Development (CAREER) Award.